How Will the Evaluation of Algorithms Lead to More Equitable Health Outcomes?

“Algorithms alone are not going to solve the massive problems we have with health disparities.” This is the view of Sherri Rose, who is a biostatistician, professor of Health Policy at Stanford University School of Medicine, and faculty director of the Health Policy Data Science Lab.

Rose should know. She has the unique perspective of someone working at the critical intersection of AI, machine learning, and health policy. After pursuing a PhD in biostatistics, Rose trained her focus on finding ways for these new and powerful algorithmic tools to improve health equity.

“I wanted to have an impact on population health through policy, from the perspective of a statistical methodologist,” Rose explains.

Her interest in this burgeoning space led her to establish the Health Care Fairness Impact Lab (a 2022 SIL Stage 2 investment) alongside co-founder David Chan, Associate Professor of Health Policy at Stanford School of Medicine. The lab convenes economists, statisticians, and clinicians who look at how to combat disparities in the ways healthcare is administered to different populations – including systematic delays in referrals and visits for some patients with certain conditions compared with their non-Hispanic white counterparts.

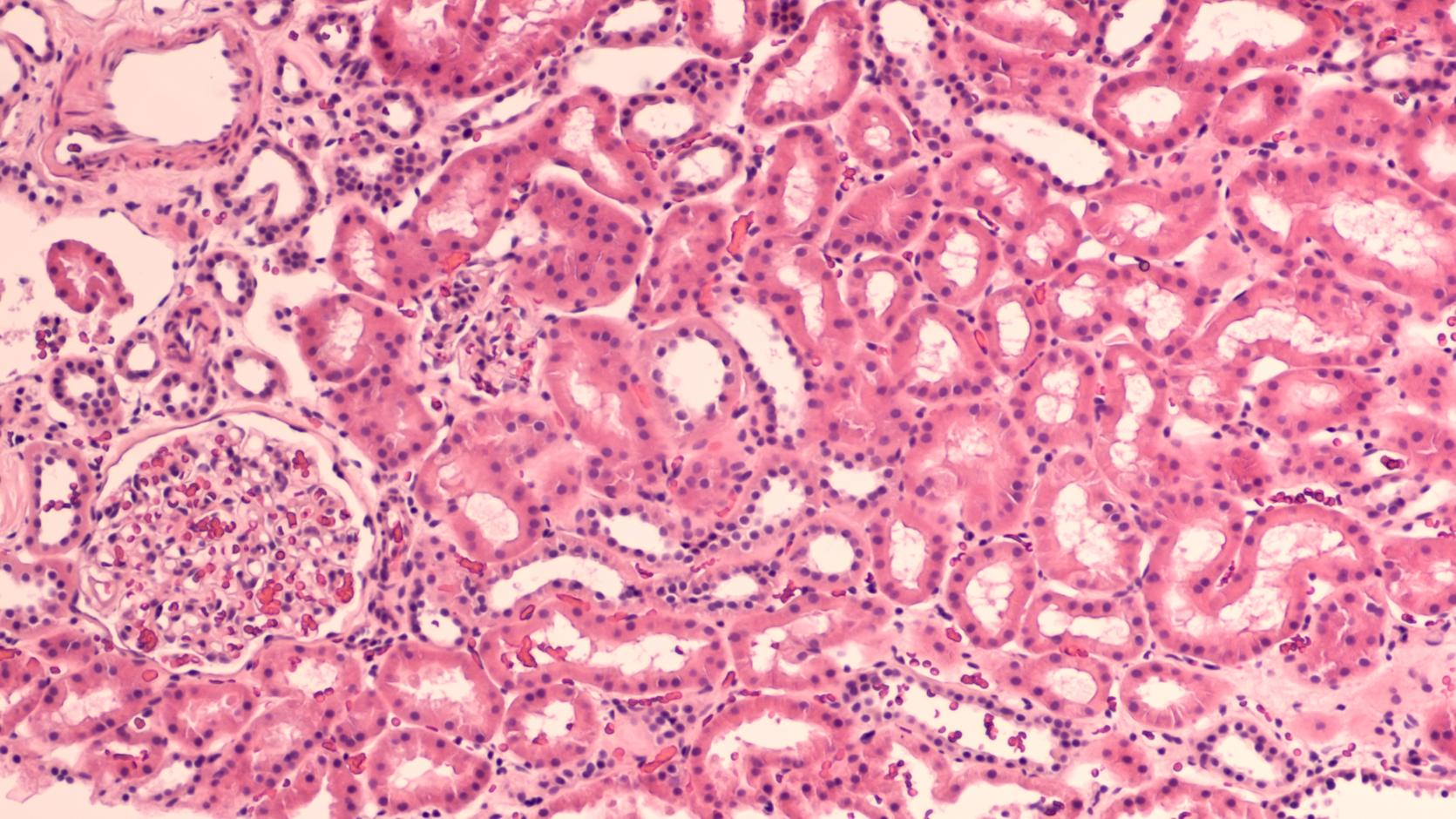

While Chan is studying emergency room settings, Rose has turned to chronic disease – specifically chronic kidney disease (CKD). CKD affects about 37 million adults in America today, with Black or African American and Hispanic patients more likely to progress to kidney failure.

To improve outcomes, Rose and her team wanted to look closely at the algorithms that currently underpin clinical decision-making, including a recent change to the estimated glomerular filtration rate (eGFR) formula for the condition.

Examining the algorithmic formula

The eGFR, as described by the National Kidney Foundation, is a formula “that measures your level of kidney function and determines your stage of kidney disease.” It is critical in helping healthcare teams decide how a kidney patient’s treatment should proceed, including decisions on nephrology referrals, when dialysis is needed, drug dosages, and even whether a kidney transplant is required.

The eGFR calculation itself uses the result of a patient’s creatinine test along with their age and documented sex. Until recently, the formula also included the patient’s race.

But the inclusion of race had no basis in clinical medicine. Now that race is better understood as a social system of classification rather than an accurate reflection of human biology, it has been acknowledged that including race in the eGFR formula can perpetuate bias. In 2021, race was removed from the formula as part of an effort to help counter the poor outcomes that can disproportionately impact certain communities, including Black or African American as well as Hispanic patients.
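For readers who want to see the arithmetic, here is a minimal Python sketch. The article does not name the specific equations, so this assumes it refers to the CKD-EPI creatinine equations: the 2009 version, which applied a race coefficient, and the 2021 refit, which does not. The function names, the `black` flag, and the input checks are illustrative choices rather than anything taken from the study, and creatinine is assumed to be measured in mg/dL.

```python
def _check_inputs(scr_mg_dl: float, age_years: float, sex: str) -> None:
    """Basic validation (hypothetical helper; both equations are for adults)."""
    if sex not in ("female", "male"):
        raise ValueError("sex must be 'female' or 'male'")
    if scr_mg_dl <= 0:
        raise ValueError("serum creatinine must be positive")
    if age_years < 18:
        raise ValueError("these equations apply to adults only")


def egfr_ckd_epi_2009(scr_mg_dl: float, age_years: float, sex: str,
                      black: bool = False) -> float:
    """Assumed 2009 CKD-EPI creatinine equation, in mL/min/1.73 m^2."""
    _check_inputs(scr_mg_dl, age_years, sex)
    kappa = 0.7 if sex == "female" else 0.9
    alpha = -0.329 if sex == "female" else -0.411
    ratio = scr_mg_dl / kappa
    egfr = (141.0 * min(ratio, 1.0) ** alpha
            * max(ratio, 1.0) ** -1.209 * 0.993 ** age_years)
    if sex == "female":
        egfr *= 1.018
    if black:
        egfr *= 1.159  # the race coefficient that the 2021 refit dropped
    return egfr


def egfr_ckd_epi_2021(scr_mg_dl: float, age_years: float, sex: str) -> float:
    """Assumed 2021 CKD-EPI creatinine equation (no race term)."""
    _check_inputs(scr_mg_dl, age_years, sex)
    kappa = 0.7 if sex == "female" else 0.9
    alpha = -0.241 if sex == "female" else -0.302
    ratio = scr_mg_dl / kappa
    egfr = (142.0 * min(ratio, 1.0) ** alpha
            * max(ratio, 1.0) ** -1.200 * 0.9938 ** age_years)
    if sex == "female":
        egfr *= 1.012
    return egfr


if __name__ == "__main__":
    # Illustrative inputs only; not data from the study.
    old = egfr_ckd_epi_2009(1.4, 60, "male", black=True)
    new = egfr_ckd_epi_2021(1.4, 60, "male")
    print(f"2009 (with race coefficient): {old:.1f}")
    print(f"2021 (no race term):          {new:.1f}")
```

Under these assumptions, the 2009 equation multiplied a Black patient’s estimate by 1.159, so for the illustrative inputs above the same lab result yields a higher estimated kidney function than the 2021 equation does.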

Rose and colleagues at Stanford’s Health Policy Data Science Lab, led by PhD student Marika Cusick, set out to better understand this change and its impact. They examined how the revised eGFR formula had affected nephrology visits and referrals for CKD patients in the intervening years. “If a change has been made, we should study it. We should be doing this more broadly and more often,” notes Rose.

The results, which may surprise some, showed that the update had no impact on outcomes for Black or African American patients: they were no more likely to receive nephrology referrals or visits, even though the change to the algorithm led them to be classified into more severe stages of the disease.
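To see why a modest shift in eGFR can change a patient’s classification, it helps to look at how values map onto stages. The sketch below assumes the stages in question are the standard KDIGO GFR categories (G1 to G5). The function name and the example values are hypothetical, and clinical staging also weighs albuminuria and whether the finding persists for at least three months, which this lookup ignores.

```python
def gfr_category(egfr: float) -> str:
    """Map an eGFR value (mL/min/1.73 m^2) to an assumed KDIGO GFR category."""
    if egfr < 0:
        raise ValueError("eGFR cannot be negative")
    # Lower bound of each category, from least to most severe.
    thresholds = [(90, "G1"), (60, "G2"), (45, "G3a"), (30, "G3b"), (15, "G4")]
    for lower_bound, label in thresholds:
        if egfr >= lower_bound:
            return label
    return "G5"  # below 15: kidney failure


if __name__ == "__main__":
    # Hypothetical before/after estimates for one patient; not study data.
    for value in (62.0, 54.0):
        print(value, gfr_category(value))
```

A hypothetical patient whose estimate drops from 62 to 54 would move from G2 to G3a without any change in the underlying lab result, which is the kind of reclassification the study tracked.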

Rose qualifies these findings by noting that her team looked exclusively at data from the Stanford Health Care system (574,194 adult patients aged 21 and older who had at least one recorded serum creatinine value between January 1, 2019, and September 1, 2023), and that the data didn’t yet include transplantations or other longer-term outcomes.

Nevertheless, it is clear that this study, released earlier in 2024, does not set out to undermine the eGFR or its modification. Instead, the lesson is about the importance of robust evaluation and establishing a meaningful feedback loop for the outcomes of algorithmic tools now deployed throughout the healthcare system. The researchers discuss this and related considerations in a policy brief developed in collaboration with Stanford HAI and Stanford Health Policy. As Rose told Nature in a feature story published this month about the shift to deracialize kidney diagnostics in the U.S., “a change of formula is a big headline… but it doesn’t necessarily solve the problem.”

Focusing on the hard problems of health inequity

Rose believes that if health policymakers better understand the limitations of AI tools when it comes to affecting patient care, they can better focus on the other factors causing inequity – and allocate resources accordingly. These problems, she asserts, are too hard to be solved by a change to an algorithm.

“The significant challenges for kidney disease, many of them are not going to be solved by changing the algorithm. That’s because they are situated within social drivers of health.” Rose offers factors like patient access to care and neighborhood conditions as examples.

The kind of analysis Rose and team conducted is still far from common. In fact, this research was the first evaluation of the updated eGFR formula post-implementation. Rose believes studies like this one, which evaluate deployed tools, can be instrumental to changing current attitudes. “[Some people believe] either an algorithm can solve the problems they are seeing, or a change to an algorithm can solve it.”

Such attitudinal change is important because of the broad proliferation of algorithms in health. Rose explains that many different formulas, both simple and complex, are being used daily by clinicians to steer the decisions that impact patient care well beyond kidney disease.

“Patients often are not aware that algorithms are being used – risk scores and additional tools – or what information is being used in them. It’s not transparent.”

Recommendations from the study seek to create more transparency by highlighting how algorithms (and changes to them) play out over time, including when they fail to have the desired impact.

Building on this research, Rose’s team, led by computer science PhD student Agata Foryciarz, is already hard at work on one of its next projects: a CKD participatory modeling study that asks patients directly about their care and will incorporate those responses to help drive better, more equitable outcomes.

“Frequently, when we think about developing a causal model of a health condition process like kidney disease progression, it’s a bunch of ‘experts’ in a room – including people like me. But patients are also experts in their own health.”

The results of this study, which should reveal more about the social factors influencing how CKD patients get their care, are expected in 2025.

Building a future of research-informed health policy

While Rose is passionate about health policy, she also realizes that there are few established “entry points” for students similarly interested in driving positive, research-informed policy change.

This led her, together with several colleagues, to establish the Advancing Health Equity and Diversity (AHEaD) summer program at Stanford, which focuses on giving college students a grounding in computational population health. She hopes to help lift up a new generation of tech-savvy professionals with a focus on health equity.

As one of the few machine learning experts working in the health policy field right now, Rose considers herself an “AI realist.” While she has worked extensively to develop algorithmic tools for health, she is also quick to caution against what she calls “overselling.”

“Oftentimes AI methods are actually worse,” Rose concedes. “One of the biggest harms comes from folks parachuting in who have very little knowledge and think they can solve a problem they learned about yesterday.” Frequently, new complex solutions function no better than what they replace.

Instead, Rose believes that better tools will only come from building workable, tailored solutions directly alongside the people who are going to be impacted by them.

Of course, many times the solution to the most pressing problems around access to adequate healthcare won’t come in the shape of an algorithm at all, and Rose wants to advocate for those “harder solutions” around social drivers of health too – particularly when it comes to the allocation of resources. “We want to focus on solutions that are genuinely grounded in the potential to improve health equity.”

Open Question is a series of stories about innovative, solutions-focused research shaped to tackle pressing social problems.